This Might Be Why OpenAI Spent $6.5 Billion on Jony Ive’s Team

Artificial intelligence is reshaping the tech world at lightning speed. From changing how we search online to generating images and videos from a few prompts, A.I. has revolutionized software. But one piece of the puzzle is still missing: a true physical form.

Despite years of innovation, A.I. still largely lives inside apps, such as ChatGPT or image generators, without a standout physical gadget to call its own. That’s something OpenAI, one of the leading A.I. labs, now wants to change.

OpenAI’s $6.5 Billion Move into Hardware

Last week, OpenAI CEO Sam Altman revealed that the company will acquire io, a start-up co-founded by former Apple design chief Jony Ive. The $6.5 billion deal is more than a business transaction: it’s a bold step toward developing “a new family of products” for the A.I. era.

Jony Ive, famous for designing the iPhone, is partnering with OpenAI to explore what A.I. hardware might look like in a post-smartphone world. The plan is to craft devices that offer a richer, more intuitive way for people to interact with A.I., moving beyond typing or tapping on screens.

From iPhones to "Galactic" Dreams

In an interview, Altman and Ive stayed tight-lipped about specific products but described ambitions that sound almost sci-fi: “galactic” innovations designed to “elevate humanity.”

“We’ve been waiting for the next big thing for 20 years,” Altman said. “We want to bring people something beyond the legacy products we’ve been using for so long.”

Their collaboration aims to move beyond smartphones and laptops toward ambient computing devices, such as wearables or glasses, that process the world in real time using A.I.

Funding, Focus, and a Shared Vision

To complete the deal, OpenAI will buy out the remaining shares in io. It already held a 23% stake from a previous agreement and will now spend around $5 billion to take full control. Ive’s design firm, LoveFrom, will continue working with OpenAI while also taking on other projects.

Altman shrugged off concerns about the cost. “We’ll be fine,” he said. “Thanks for the concern.”

The duo's partnership blossomed from a personal connection—after Ive's son introduced him to ChatGPT, Ive became fascinated by A.I. and reached out to Altman. They quickly bonded over shared ideas for human-centered design and technology.

Building a New Interface for A.I.

This isn’t the first attempt at rethinking A.I. hardware. Altman was an investor in Humane, a start-up whose A.I. pin didn’t find success. But this time, with OpenAI’s resources and Ive’s unmatched design pedigree, hopes are high.

Interestingly, Ive has expressed regret over some consequences of the smartphone era. “I shoulder a lot of the responsibility for what these things have brought us,” he said, referring to the overload of notifications and digital distractions.

That’s why this new mission feels personal. “I believe everything I’ve done in my career was leading to this,” Ive said.

A Future in Form

OpenAI made waves in 2022 with the release of ChatGPT, then raised $40 billion in March 2025. Now the focus is shifting from pure software to tangible, physical products: ones that could redefine our daily relationship with A.I.

As Silicon Valley giants explore what comes next, this collaboration between Jony Ive and Sam Altman might just be the blueprint for the A.I. device of the future.

“I Believe Everything I've Done Was Leading to This”

Jony Ive recently said, “I believe everything I’ve done in my career was leading to this.” That’s no small statement from the man who helped design the Newton MessagePad, iMac, iPod, iPhone, iPad, Apple Watch, and even Apple Park. His career has been a continuous journey toward making technology more personal, intuitive, and seamlessly integrated into our daily lives.

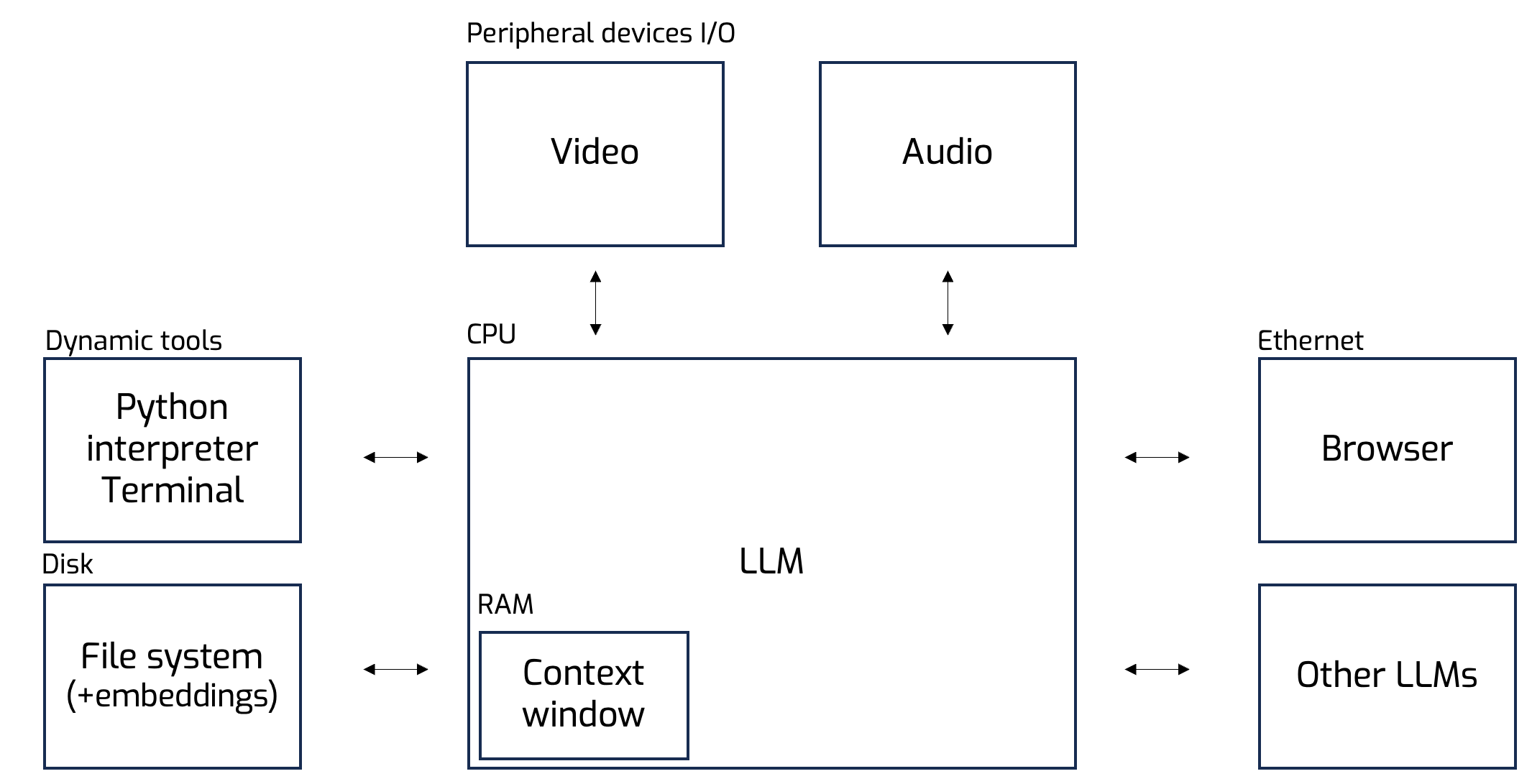

That’s why it makes sense that Ive’s next act would be to help design a device powered by a large language model (LLM), a possible spiritual successor to the iPhone. Instead of apps, such a device could be built around an “LLM OS”: a large language model at the center, interfacing with video, audio, browsers, other LLMs, and classical computing tools like calculators, file systems, and Python terminals.
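To make the “LLM OS” idea a little more concrete, here is a minimal Python sketch under heavy assumptions: a central dispatcher routes each request either to a classical tool (a calculator, a toy file store) or back to the model. The `plan` function is a hypothetical stand-in for the language model that uses simple prefix rules, where a real system would let the model itself choose the tool. Every name here (`plan`, `calculator`, `files`, `llm_os`) is illustrative, not OpenAI’s design or any real API.

```python
import ast
import operator

# Hypothetical stand-in for the language model. In a real "LLM OS" the
# model would decide which tool to call; here simple prefixes decide.
def plan(request: str) -> tuple[str, str]:
    if request.startswith("calc:"):
        return "calculator", request[len("calc:"):].strip()
    if request.startswith("read:"):
        return "files", request[len("read:"):].strip()
    return "chat", request

# Arithmetic operators the calculator tool is allowed to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def calculator(expr: str) -> float:
    """Evaluate basic arithmetic by walking the syntax tree (no eval())."""
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expr, mode="eval").body)

# A toy in-memory "file system" tool (hypothetical contents).
FILES = {"notes.txt": "Buy milk"}

def files(name: str) -> str:
    return FILES.get(name, "<no such file>")

# Registry of tools the central model can route to.
TOOLS = {
    "calculator": calculator,
    "files": files,
    "chat": lambda text: f"(model reply to: {text})",  # placeholder reply
}

def llm_os(request: str):
    tool, arg = plan(request)
    return TOOLS[tool](arg)

print(llm_os("calc: 2 * (3 + 4)"))  # 14
print(llm_os("read: notes.txt"))    # Buy milk
```

The design choice worth noticing is the split of responsibilities: the tools stay simple and deterministic (the calculator walks Python’s `ast` tree instead of calling `eval`), while all the judgment about which tool to use lives in the central model.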

A Glimpse Into the Future: LLM as the Core of the Device

In the diagram above, I see a device that no longer relies on traditional app structures. Instead, it uses a large language model as its operating system, one capable of:

- Reading and generating text, images, video, and sound

- Acting as a calculator, programmer, or researcher

- Speaking and listening in natural language

- Remembering long-term context via embeddings

- Even “self-improving” in specific reward-based domains

- Browsing the web intelligently and in real-time

- Communicating with other LLMs

In essence, it becomes your personalized co-pilot for the digital world. And that brings us back to Jony Ive’s core design philosophy: make technology disappear into the background, so what remains is the experience.
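Of the capabilities listed above, “remembering long-term context via embeddings” is the most concretely technical. A common pattern is to store past snippets alongside vector embeddings and, when a new question arrives, recall the stored snippets whose vectors are most similar by cosine similarity. The Python sketch below assumes a toy bag-of-words `embed` function as a stand-in for a learned embedding model; `Memory`, `remember`, and `recall` are hypothetical names used only for illustration.

```python
import math
from collections import Counter

# Hypothetical toy embedding: a bag-of-words count vector.
# A real device would use a learned embedding model instead.
def embed(text: str) -> Counter:
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

class Memory:
    """Stores past snippets and recalls the ones most similar to a query."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

m = Memory()
m.remember("my sister's birthday is in june")
m.remember("i am allergic to peanuts")
print(m.recall("when is my sister's birthday"))  # ["my sister's birthday is in june"]
```

Swapping the toy `embed` for a real embedding model keeps the rest of the structure unchanged, which is why this retrieval pattern is a natural fit for a device meant to “know you well.”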

My Take: The Next Leap in Human-Machine Interface

Based on Ive's past work and the LLM OS vision, here's what I believe we’ll see:

- Form factor: A sleek, always-on device. Possibly wearable—like a pair of AR glasses or an evolved version of the Apple Watch.

- Interface: Strongly voice-driven. Further out, thought-driven interfaces may be on the horizon, as hinted by brain-computer interface patents that place sensors in earbuds.

- Functionality: No apps. Just conversation. It might know you well enough to predict intent, not just respond.

- Hardware-LLM fusion: Not just embedding an AI into a device, but co-designing hardware and AI as a single system.

Ive spent his career obsessing over the edge between form and function. Altman is pushing the frontier of intelligence itself. Together, they’re likely building not just another gadget—but a new kind of relationship between human and machine.

LLMs have the potential to make sense of the chaos we’re currently living in. We’re in a time of "FRAYING"—where every new need leads to yet another app, yet another tool. This fragmentation has made our digital lives complex, noisy, and disjointed. But LLMs, especially when acting as the operating system, offer the ability to form a cohesive framework—a BRACKET, a unifying layer that wraps around all of this and makes it coherent again. It’s the next level of generalization in computing. And with that, there’s a real chance to pull all these scattered functions back into one device. I believe that's exactly what will emerge from Mr. Ive’s next creation.

And if they succeed? It may not just replace your phone. It might redefine what a “computer” even means.