The Future of Work in an AI World: Why Trust, Integration, and a Dash of “Fairy Dust” Will Keep Humans at the Center

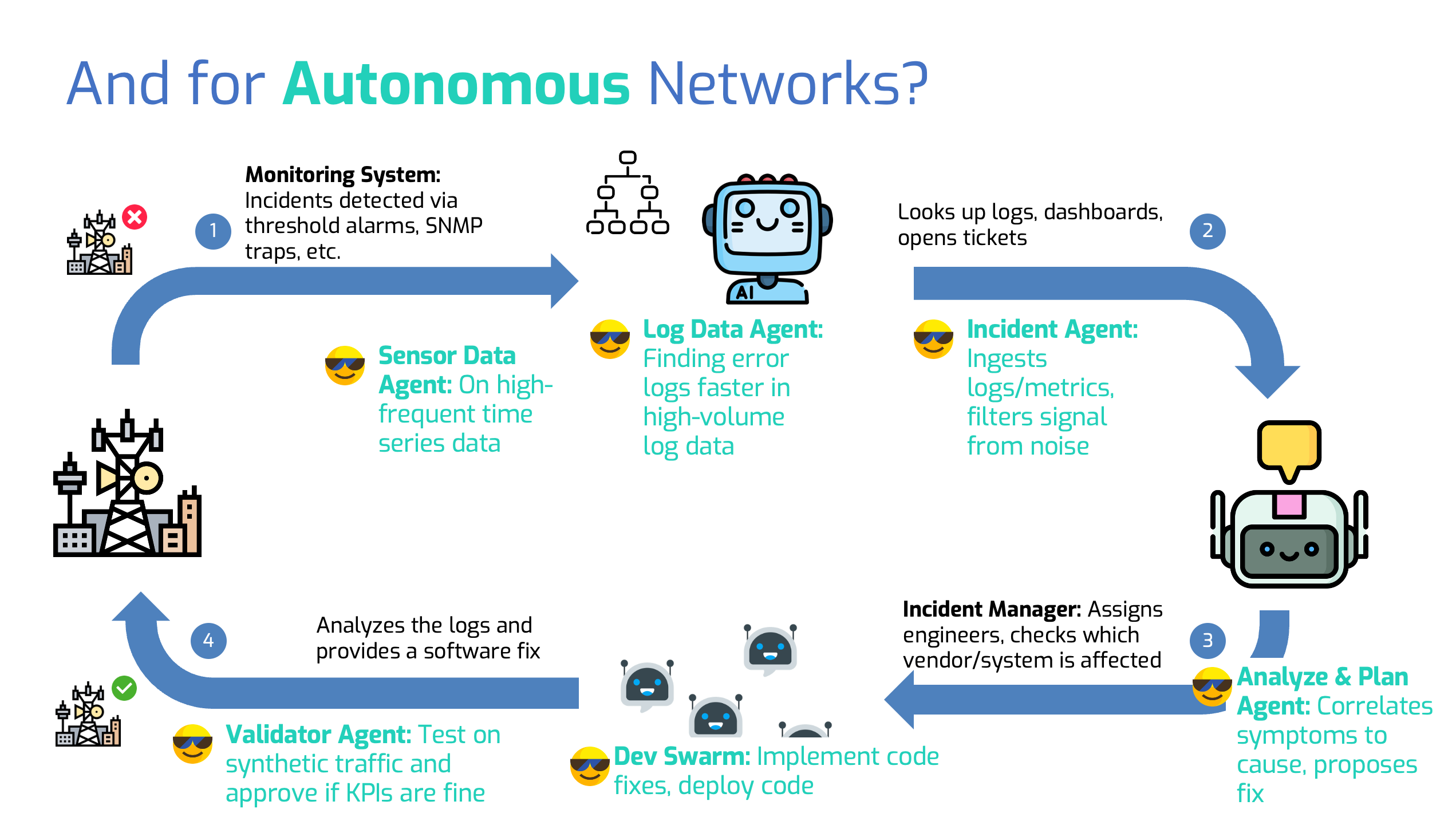

A few weeks ago, I stood in front of a telecommunications provider’s team to present an idea I’ve been passionate about: autonomous networks. Inspired by the way manufacturing uses prescriptive maintenance to detect and resolve flaws, I envisioned networks in which AI agents autonomously detect incidents, process sensor data, and resolve problems through automated coding.

The concept was compelling, yet as I spoke one thing became clear: even the most advanced automation leaves critical gaps where human expertise is indispensable. Google’s recent work illustrates this well (Migrating Code At Scale With LLMs At Google, Ziftci et al., https://arxiv.org/abs/2504.09691). With AI, they managed to reduce integration effort by 75%. Impressive, right? But here’s the catch: something as seemingly trivial as replacing a 32-bit variable with a 64-bit one across a large codebase still takes a year to implement reliably. Why? Because finding every instance, understanding its dependencies, and ensuring nothing breaks takes more than brute force. It takes context.
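To make the 32-bit-to-64-bit point concrete, here is a minimal Python sketch of what happens when one call site is migrated and another is missed. It is an illustration, not code from the Google paper. The helper `as_int32` is hypothetical; it simulates the silent two’s-complement wraparound that a narrowing conversion performs in many languages, such as a Java `(int)` cast applied to a `long`.

```python
def as_int32(value: int) -> int:
    """Hypothetical helper: simulate storing an int in a signed 32-bit field.

    Only the low 32 bits survive, interpreted as two's complement, which is
    what a narrowing cast such as Java's (int) on a long does.
    """
    return (value + 2**31) % 2**32 - 2**31


# An ID that fits in 64 bits but exceeds the signed 32-bit maximum (2**31 - 1).
record_id = 3_000_000_000

migrated = record_id             # call site already updated to 64-bit
forgotten = as_int32(record_id)  # call site someone missed, still 32-bit

print(migrated, forgotten)  # prints: 3000000000 -1294967296
```

Nothing crashes: the forgotten call site simply produces a wrong, negative ID. That is why finding every instance matters more than making any single edit.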

This mirrors the argument made in a recent New York Times article, which explored what kinds of jobs AI might create, even as it replaces many others. The author identified three areas where humans will remain essential: trust, integration, and taste. Let me expand on these, with examples that illustrate why they matter.

1. Trust: The Human-in-the-Loop Factor

Trust is not a feature you can code; it’s something you earn and maintain. Even as AI becomes more sophisticated, businesses and individuals need humans to take responsibility, verify accuracy, and provide accountability.

Take my own experience with technical document translation. Large Language Models (LLMs) are powerful, and they can outperform many traditional translation tools on metrics such as BLEU. Our internal product, UltraScale, was recently evaluated on exactly this. But when it comes to domain-specific accuracy, LLMs often falter. Translating a legal or engineering document is not just about language; it’s about correctly handling every symbol, sign, and nuance. A ≥ mistranslated as ≤ can have serious consequences. That’s why we need a human in the loop to review the output and ensure correctness. Over time, AI can improve, but the responsibility still lies with humans.
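A human reviewer cannot read every sentence with equal care, so one practical pattern is to let a cheap automated check decide which segments a person must look at. The minimal Python sketch below is hypothetical and is not how UltraScale works: it compares the digits and a hand-picked set of critical symbols (`CRITICAL_SYMBOLS`, an assumption) in the source and the translation, and flags any segment where they differ, such as a ≥ that came out as ≤.

```python
from collections import Counter

# Hypothetical set of symbols whose meaning must survive translation unchanged.
CRITICAL_SYMBOLS = set("≥≤<>=±%°")


def symbol_counts(text: str) -> Counter:
    """Count only the critical symbols and digits in a text segment."""
    return Counter(ch for ch in text if ch in CRITICAL_SYMBOLS or ch.isdigit())


def flag_for_review(source: str, translation: str) -> bool:
    """Return True if a human should check this segment.

    Catches swapped or dropped symbols and numbers, but cannot judge
    whether the surrounding wording is correct.
    """
    return symbol_counts(source) != symbol_counts(translation)


source = "Die Spannung muss ≥ 230 V sein."
print(flag_for_review(source, "The voltage must be ≤ 230 V."))  # prints: True
print(flag_for_review(source, "The voltage must be ≥ 230 V."))  # prints: False
```

The check deliberately errs toward sending more segments to a person: a translation that spells out “greater than or equal to” instead of using ≥ would also be flagged, which is the cheaper mistake to make.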

The NYT article highlights roles like:

- AI ethicists – professionals who set and enforce guidelines on how AI should be used responsibly.

- AI auditors – specialists who evaluate AI decisions to ensure fairness, accuracy, and compliance.

- Human safety supervisors – particularly in industries where errors could be catastrophic, humans are needed to double-check AI outcomes.

These roles reinforce that trust requires human oversight, especially when the stakes are high.

2. Integration: The Hidden Complexity

Integration is one of those invisible tasks that only gets noticed when it fails. It’s about making different systems work together, adapting to legacy environments, and handling edge cases AI might overlook.

Google’s code-migration experience illustrates this well. AI tools can automate many coding tasks, but full automation still has difficulty understanding context across interconnected systems. Integration isn’t just coding; it’s orchestration, prioritization, and risk management.

The NYT article mentions several emerging roles here, and I would add one critical function: the Evaluation Specialist. Evaluation and accuracy are not binary; deciding how much accuracy to pay for is a cost-versus-risk decision. Sometimes a company may accept lower quality because the risk is minimal, while in other cases the highest precision is non-negotiable. Evaluation Specialists will calibrate this trade-off based on the company’s budget, industry requirements, and risk profile.
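The cost-versus-risk trade-off can be written down as a simple expected-cost comparison: for each quality level, add the cost of producing an item to the expected cost of the errors that slip through, then pick the cheapest option that still meets any hard accuracy requirement. The Python sketch below is illustrative only; the option names, costs, and error rates are made-up placeholders, not measurements, and `max_error_rate` is a hypothetical parameter standing in for a non-negotiable precision target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualityOption:
    name: str
    cost_per_item: float  # cost of producing one item at this quality level
    error_rate: float     # expected fraction of items containing an error (0 to 1)


def expected_cost(option: QualityOption, cost_per_error: float) -> float:
    """Production cost plus the expected cost of errors that slip through."""
    return option.cost_per_item + option.error_rate * cost_per_error


def choose_option(options, cost_per_error, max_error_rate=1.0):
    """Cheapest option in expectation, among those meeting a hard error-rate limit."""
    allowed = [o for o in options if o.error_rate <= max_error_rate]
    if not allowed:
        raise ValueError("no option meets the required error rate")
    return min(allowed, key=lambda o: expected_cost(o, cost_per_error))


# Placeholder numbers for illustration only.
OPTIONS = [
    QualityOption("AI only", cost_per_item=0.10, error_rate=0.05),
    QualityOption("AI + spot check", cost_per_item=1.00, error_rate=0.01),
    QualityOption("AI + full human review", cost_per_item=5.00, error_rate=0.001),
]

for cost_per_error in (10, 100, 10_000):
    print(cost_per_error, choose_option(OPTIONS, cost_per_error).name)
# prints:
# 10 AI only
# 100 AI + spot check
# 10000 AI + full human review
```

As the cost of a single error rises, the cheapest choice shifts from AI alone toward full human review. Passing a strict `max_error_rate` models the cases where precision is non-negotiable regardless of cost.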

Other roles include:

- AI workflow designers – experts who create frameworks that allow AI to plug into complex workflows.

- Human-machine teaming managers – professionals who optimize collaboration between human workers and AI agents.

- Data curators – roles focused on preparing and integrating high-quality data so AI systems function correctly.

These positions highlight that while AI is a powerful tool, humans remain essential for balancing precision, cost, and business strategy.

3. Taste: Creativity and the “Fairy Dust” Factor

And then there’s taste, or, as I call it in German, Feenstaub (“fairy dust”): that sprinkle of magic that sets great ideas apart. AI can generate endless possibilities, but it lacks the subtlety and intuition to know which ones will truly resonate.

When I wanted to name my company ultra mAinds, I initially turned to AI for suggestions. It came up with a name I liked. But when I Googled it, I discovered a company in a similar field already using it. That’s when I realized: AI can suggest, but it can’t differentiate like a human can. The final touch—the Feenstaub—had to come from me.

The NYT article points out roles where human creativity is the deciding factor:

- AI-assisted creatives – artists, designers, and writers who use AI as a tool but bring the unique vision only humans possess.

- Cultural curators – professionals who ensure that AI outputs align with human cultural values and aesthetics.

- Innovation scouts – people who identify and apply novel, out-of-the-box ideas that AI might not even consider.

To this, I would add a new and crucial role: the Differentiation Designer. In today’s VUCA (Volatile, Uncertain, Complex, Ambiguous) world, markets evolve faster than ever. Opportunities emerge suddenly, and those who can adapt quickly and serve new profit pools will lead. Differentiation Designers will combine creativity, market awareness, and strategic thinking to shape products, services, and experiences that stand out. AI can generate options, but humans decide what truly makes a difference—and they do so with a speed and nuance that AI cannot replicate.

My Take: The Real Risk Will Be Losing Our Own Feenstaub

My real takeaway is this: AI is incredibly powerful because it can replay, remix, and create within its boundaries, producing countless combinations and solutions no one has seen before. But it remains limited to the patterns and data it has been given. Feenstaub, the spark of true differentiation, will always come from humans.

The biggest risk I see is not AI itself, but how we use it. If we rely on it too heavily, we risk dulling our own creative edge. Studies already suggest that excessive use of social media and tools like ChatGPT can reduce our cognitive effort, making us less inclined to think deeply or independently. If we become too dependent, we may lose the very creativity that AI cannot replicate. That, to me, is the greatest danger: not that AI will take Feenstaub away, but that we will stop providing it ourselves.